Introduction

We have received several questions about how Tanuki can be used alongside web scraping, so this post shares a few quick strategies that we have found helpful.

We'll explore four examples of using Tanuki with BeautifulSoup and Selenium to scrape a website and extract the desired fields into a pydantic class:

- Quotes from their div element

- Cocktail recipe assembled from entire page

- Rental units with User-Agent to mimic browser session

- Airbnb listings with Selenium webdriver

The code for these examples can be found at the following links:

Getting started

The only required environment variable is your OpenAI API key. Optionally, you can also set a User-Agent header, which the third example (rental units) needs. This header makes the scraping request look like it comes from a web browser, which helps keep the request from being detected and blocked.

Putting these together, your local .env file should look like:

OPENAI_API_KEY=sk-XXX

USER_AGENT=XXX
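As a minimal sketch of how these two values might be consumed, the snippet below builds the request headers from an environment mapping. The helper name `build_headers` is hypothetical, and the sketch assumes the .env values have already been loaded into the process environment (for example by a dotenv loader).

```python
import os
from typing import Dict, Mapping


def build_headers(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return HTTP headers for scraping, adding User-Agent only when it is set.

    `build_headers` is a hypothetical helper, not part of Tanuki or requests.
    """
    user_agent = env.get("USER_AGENT", "").strip()
    # Omit the header entirely when USER_AGENT is unset so the HTTP client
    # falls back to its own default User-Agent.
    return {"User-Agent": user_agent} if user_agent else {}


if __name__ == "__main__":
    print(build_headers({"USER_AGENT": "Mozilla/5.0 (example)"}))
    print(build_headers({}))
```

Passing the mapping in as an argument keeps the helper easy to check without touching the real environment.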

Scraping with BeautifulSoup

Beautiful Soup is a Python library that parses HTML and XML documents so that you can extract and manipulate data from web pages. It navigates the document's structure and gives simple access to specific elements and their content.

The first three examples covered here all use the utility function below to scrape a given URL and return the parsed text:

import os
from typing import List, Optional

import requests
from bs4 import BeautifulSoup

user_agent = os.getenv("USER_AGENT")  # assumes the .env values are already loaded into the environment
headers = {"User-Agent": user_agent} if user_agent else {}  # assumed definition; the original snippet leaves `headers` undefined

def scrape_url(url: str, class_name: Optional[str] = None) -> List[str]:
    """Scrape the url and return the raw content(s) of all entities with a given
    div class name."""
    print(f"Scraping {url=} for {class_name=} entities...")
    response = requests.get(url, headers=headers)
    contents = []
    # Parse html content if response is successful
    if response.status_code == 200:
        soup = BeautifulSoup(response.text, 'html.parser')
        # Loop through the matched elements to get contents if a class name is provided
        if class_name:
            entities = soup.find_all('div', class_=class_name)
            for entity in entities:
                contents.append(entity.text.strip())
        # Otherwise, just get the text content
        else:
            contents.append(soup.text.strip())
    # Print error if response is unsuccessful
    else:
        print(response.status_code)
        print(response.text)
    return contents

If a "class_name" argument is provided, all div elements with that class name are found and their text content extracted. If no such argument is provided, the entire text content of the webpage is returned.
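To make the class-name mode concrete without a network call, here is a dependency-free sketch that mimics it with Python's built-in html.parser. It is an illustration only, not the post's method: `DivTextCollector` and `div_texts` are hypothetical names, the sample HTML is invented, and BeautifulSoup's find_all treats malformed markup and nested matches differently.

```python
from html.parser import HTMLParser
from typing import List


class DivTextCollector(HTMLParser):
    """Collect the stripped text of every <div> carrying a given class."""

    def __init__(self, class_name: str) -> None:
        super().__init__()
        self.class_name = class_name
        self.depth = 0  # nesting depth while inside a matching div
        self.parts: List[str] = []
        self.contents: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "div":
            return
        if self.depth:  # a div nested inside a matching div
            self.depth += 1
        elif self.class_name in (dict(attrs).get("class") or "").split():
            self.depth = 1
            self.parts = []

    def handle_endtag(self, tag):
        if tag == "div" and self.depth:
            self.depth -= 1
            if self.depth == 0:  # the matching div just closed
                self.contents.append("".join(self.parts).strip())

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def div_texts(html: str, class_name: str) -> List[str]:
    """Return the text of each div whose class list includes `class_name`."""
    parser = DivTextCollector(class_name)
    parser.feed(html)
    parser.close()
    return parser.contents


sample = (
    '<div class="quote"> Hello <span>world</span> </div>'
    '<div class="other">skip</div>'
    '<div class="quote big">Bye</div>'
)
print(div_texts(sample, "quote"))
```

Only the two divs whose class list includes "quote" contribute text; the "other" div is ignored, which is the same selection the class_name argument performs.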

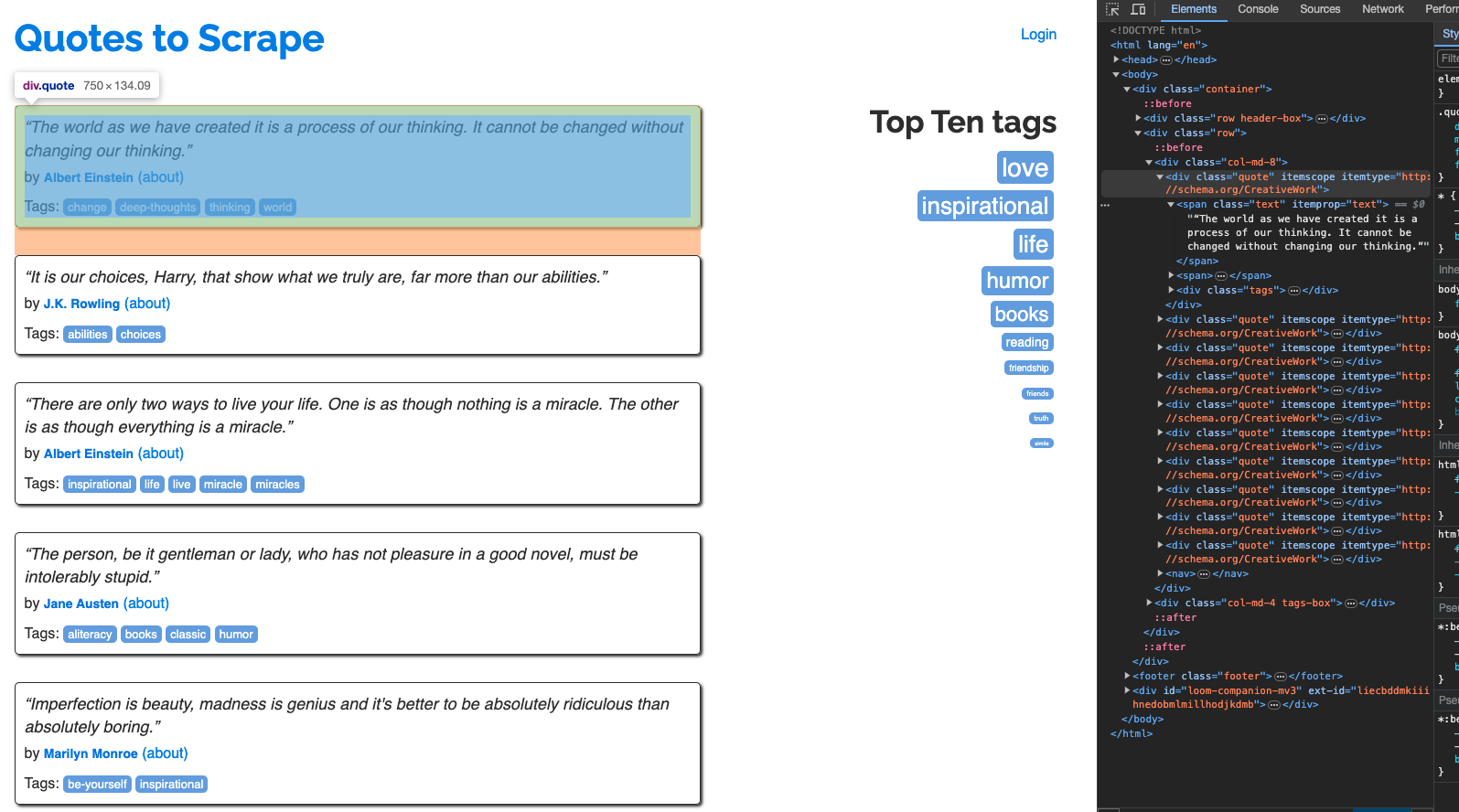

Example: Quotes by div element

Our first example uses Tanuki to scrape a webpage and return a list of quotes from Quotes to Scrape. As the accompanying image shows, the page places each quote in a div element with the class name "quote". Passing this class name to the "scrape_url" function defined above extracts the contents of these blocks directly: scrape_url(url=url, class_name="quote").

Each "quote" content element is then sent to the @tanuki.patch decorated function defined below. This function calls OpenAI in the background using the docstring statement to extract the desired quote information, while also ensuring that the resulting output conforms to the Quote class definition.

from typing import List, Optional

import tanuki
from pydantic import BaseModel

class Quote(BaseModel):
    text: str
    author: str
    tags: List[str] = []

@tanuki.patch
def extract_quote(content: str) -> Optional[Quote]:
    """
    Examine the content string and extract the quote details for the text, author, and tags.
    """

For example, the resulting output for the Albert Einstein quote block is:

Quote(
    text='The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.',
    author='Albert Einstein',
    tags=['change', 'deep-thoughts', 'thinking', 'world'],
)
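The flow described above (scrape, then pass each block to the patched function) can be sketched as a small loop. Because the patched function returns Optional[Quote], the sketch drops None results. `extract_all` is a hypothetical helper, and a stand-in extractor with invented sample data replaces the real `extract_quote` so that the snippet runs without an API key.

```python
from typing import Callable, Iterable, List, Optional, TypeVar

T = TypeVar("T")


def extract_all(contents: Iterable[str],
                extractor: Callable[[str], Optional[T]]) -> List[T]:
    """Apply `extractor` to each scraped block and keep the non-None results."""
    results: List[T] = []
    for content in contents:
        item = extractor(content)
        if item is not None:  # the extractor may decline to return a value
            results.append(item)
    return results


def fake_extract_quote(content: str) -> Optional[dict]:
    """Stand-in for a @tanuki.patch function; real extraction calls the model."""
    if " by " not in content:
        return None
    text, author = content.rsplit(" by ", 1)
    return {"text": text, "author": author}


blocks = ["Stay curious. by Ada", "navigation menu"]
print(extract_all(blocks, fake_extract_quote))
```

In the real pipeline the call would look like extract_all(scrape_url(url=url, class_name="quote"), extract_quote).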

Additional use cases of this method are also shared in the example repo here for the following sites:

Example: Cocktail ingredients from entire page

Our second example uses Tanuki to extract the contents from the raw text of an entire webpage. Here, we will extract the cocktail name, ingredients, instructions, and list of similar cocktails from Kindred Cocktails, in particular the Old-Fashioned recipe.

Since the information is scattered across the entire webpage, it's easier to pass the entire page contents to the @tanuki.patch decorated function in order to return the desired Cocktail class data structure:

class Cocktail(BaseModel):
    name: str
    ingredients: List[str] = []
    instructions: str
    similar: List[str] = []

@tanuki.patch
def extract_cocktail(content: str) -> Optional[Cocktail]:
    """
    Examine the content string and extract the cocktail details for the ingredients, instructions, and similar cocktails.
    """

It's also necessary to define a @tanuki.align statement to ensure reliable performance and assert the intended behavior:

@tanuki.align
def align_extract_cocktail() -> None:
    cocktail = """Black Rose | Kindred Cocktails\n\n\n\n\n\n Skip to main content\n \n\n\n\n\n\nKindred Cocktails\n\n\nToggle navigation\n\n\n\n\n\n\n\n\nMain navigation\n\n\nHome\n\n\nCocktails\n\n\nNew\n\n\nInfo \n\n\nStyle guidelines\n\n\nIngredients\n\n\n\n\n\nMeasurement units\n\n\nHistoric Cocktail Books\n\n\nRecommended Brands\n\n\nAmari & Friends\n\n\nArticles & Reviews\n\n\n\n\n\nAbout us\n\n\nLearn More\n\n\nFAQ\n\n\nTerms of Use\n\n\nContact us\n\n\n\n\nYou \n\n\nLog in\n\n\nSign Up\n\n\nReset your password\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nHome\n\n\nCocktails\n\n\n Black Rose\n \n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nCopy\n\n\n\n\nBlack Rose\n \n\n\n\n\n\n\n\n\n\n2 oz Bourbon\n\n1 ds Grenadine\n\n2 ds Peychaud's Bitters\n\n1 Lemon peel (flamed, for garnish)\n\n\n\nInstructions\nFill an old-fashioned glass three-quarters full with ice. Add the bourbon, grenadine, and bitters, and stir. Garnish with the lemon peel.\n\n\n\n\n\n\nCocktail summary\n\n\n\nPosted by\nThe Boston Shaker\n on \n4/12/2011\n\n\n\n\nIs of\nunknown authenticity\n\n\nReference\nDale Degroff, The Essential Cocktail, p48\n\n\n\nCurator\nNot yet rated\n\n\nAverage\n3.5 stars (6 ratings)\n\n\n\nYieldsDrink\n\n\nScale\n\n\nBourbon, Peychaud's Bitters, Grenadine, Lemon peel\nPT5M\nPT0M\nCocktail\nCocktail\n1\ncraft, alcoholic\n3.66667\n6\n\n\n\n\n\n\n\n\n\n\nCocktail Book\n\nLog in or sign up to start building your Cocktail Book.\n\n\n\n\nFrom other usersWith a modest grenadine dash, this drink didn't do much for me, but adding a bit more won me over.\nSimilar cocktailsNew Orleans Cocktail — Bourbon, Peychaud's Bitters, Orange Curaçao, Lemon peelOld Fashioned — Bourbon, Bitters, Sugar, Lemon peelBattle of New Orleans — Bourbon, Peychaud's Bitters, Absinthe, Orange bitters, Simple syrupImproved Whiskey Cocktail — Bourbon, Bitters, Maraschino Liqueur, Absinthe, Simple syrup, Lemon peelDerby Cocktail — Bourbon, Bénédictine, BittersMother-In-Law — Bourbon, Orange Curaçao, Maraschino Liqueur, 
Peychaud's Bitters, Bitters, Torani Amer, Simple syrupMint Julep — Bourbon, Rich demerara syrup 2:1, MintThe Journey — Bourbon, Mezcal, Hazelnut liqueurBenton's Old Fashioned — Bourbon, Bitters, Grade B maple syrup, Orange peelFancy Mint Julep — Bourbon, Simple syrup, Mint, Fine sugar\n\nComments\n\n\n\n\n\nLog in or register to post comments\n\n\n\n\n\n\n\n\n© 2010-2023 Dan Chadwick. Kindred Cocktails™ is a trademark of Dan Chadwick."""
    assert extract_cocktail(cocktail) == Cocktail(
        name="Black Rose",
        ingredients=["2 oz Bourbon", "1 ds Grenadine", "2 ds Peychaud's Bitters", "1 Lemon peel (flamed, for garnish)"],
        instructions="Fill an old-fashioned glass three-quarters full with ice. Add the bourbon, grenadine, and bitters, and stir. Garnish with the lemon peel.",
        similar=["New Orleans Cocktail", "Old Fashioned", "Battle of New Orleans", "Improved Whiskey Cocktail", "Derby Cocktail", "Mother-In-Law", "Mint Julep", "The Journey", "Benton's Old Fashioned", "Fancy Mint Julep"],
    )

More specifically, this align statement ensures that:

- The name is properly parsed (i.e. "| Kindred Cocktails" is removed)

- The list of similar cocktails contains only the cocktail names and not the ingredients

With this align statement in place, the resulting output when scraping and processing the "old-fashioned" cocktail recipe is:

Cocktail(
    name='Old Fashioned',
    ingredients=['3 oz Bourbon (or rye)', '1 cube Sugar (or 1 tsp simple syrup)', '3 ds Bitters, Angostura', '1 twst Lemon peel (as garnish)'],
    instructions='Wet sugar cube with bitters and a dash of soda or water in an old fashioned glass, muddle, add ice and whiskey, stir to dissolve thoroughly, garnish',
    similar=["Benton's Old Fashioned", 'Improved Whiskey Cocktail', 'Battle of New Orleans', 'Mint Julep', 'Black Rose', 'Mother-In-Law', 'Fancy Mint Julep', 'The Earhart', 'The Journey', 'Chai-Town Brown']
)

Another use case for scraping an entire page is shared in the example repo here for extracting car specifications.

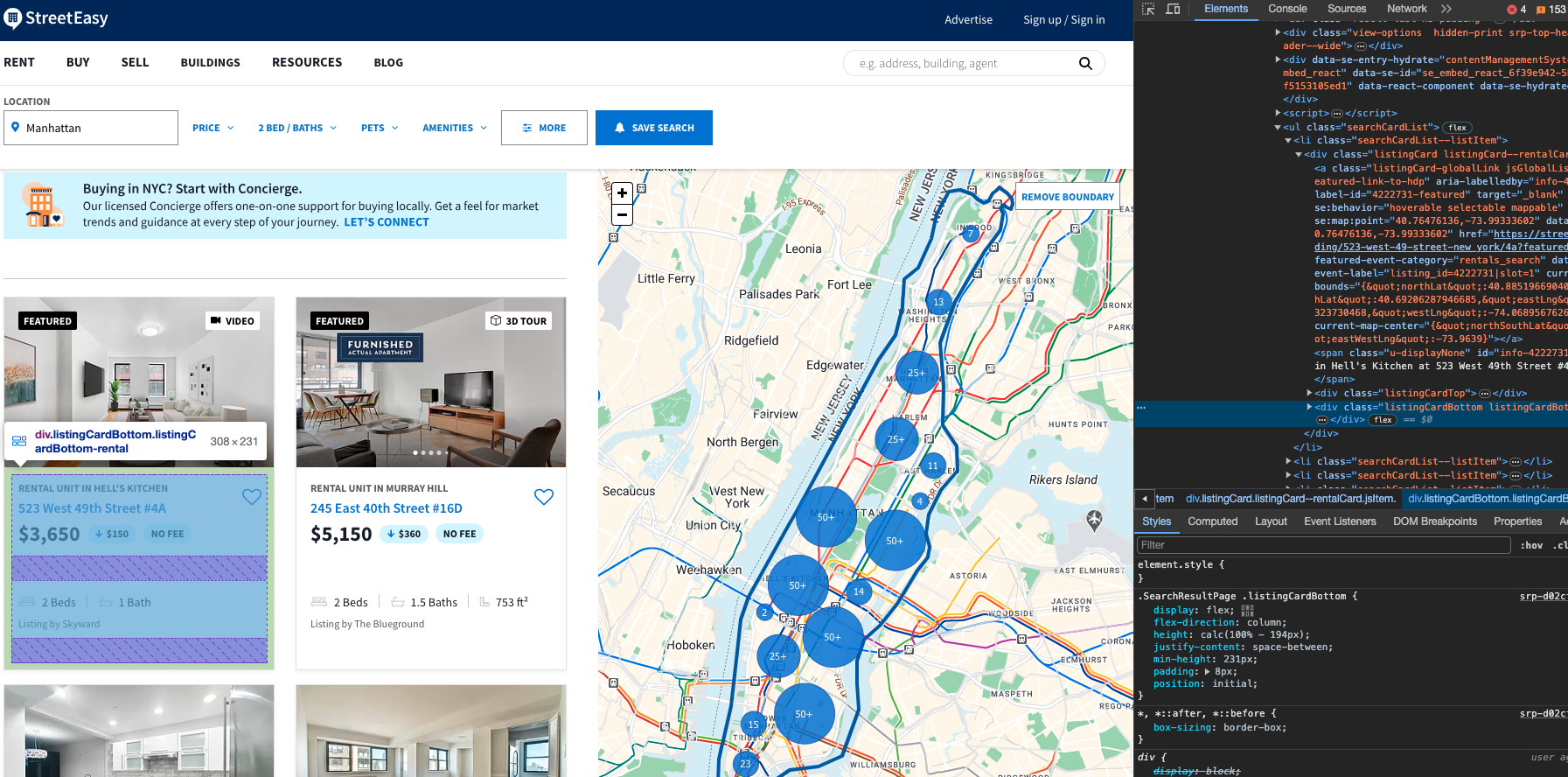

Example: Rental units with a user-agent

Our third example uses Tanuki to extract rental property listings from StreetEasy, a rental marketplace that caters to New York City apartments. As the accompanying image shows, the page places the information for each rental property in a div element with the class name "listBottomCard".

We must first specify a User-Agent header before we can successfully scrape and process this page. To do so, populate the USER_AGENT environment variable in your .env file with an appropriate user-agent value. For example:

USER_AGENT="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 OPR/83.0.4254.62"

Let's now define the @tanuki.patch function and associated Property class data structure. The text from each "listBottomCard" div element will be passed to this method.

class Property(BaseModel):
    neighborhood: str
    address: str
    price: float
    fee: bool
    beds: float
    bath: float
    listed_by: str

@tanuki.patch
def extract_property(content: str) -> Optional[Property]:
    """
    Examine the content string and extract the rental property details for the neighborhood, address, price, number of beds, number of bathrooms, square footage, and company that is listing the property.
    """

Let's also define a @tanuki.align statement to condition the response. In particular, it ensures that the boolean fee field correctly reflects whether a property has a broker fee (for example, "NO FEE" in the listing text maps to fee=False).

@tanuki.align
def align_extract_property() -> None:
    unit_one = "Rental Unit in Lincoln Square\n \n\n\n229 West 60th Street #7H\n\n\n\n$7,250\nNO FEE\n\n\n\n\n\n\n\n\n2 Beds\n\n\n\n\n2 Baths\n\n\n\n\n\n 1,386\n square feet\nft²\n\n\n\n\n\n Listing by Algin Management"
    assert extract_property(unit_one) == Property(
        neighborhood="Lincoln Square",
        address="229 West 60th Street #7H",
        price=7250.0,
        fee=False,
        beds=2.0,
        bath=2.0,
        listed_by="Algin Management",
    )

Putting it all together, the resulting output for a single property is:

Property(
    neighborhood='Lenox Hill',
    address='201 E 69th Street #14O',
    price=7395.0,
    fee=False,
    beds=2.0,
    bath=2.0,
    listed_by='TF Cornerstone'
)

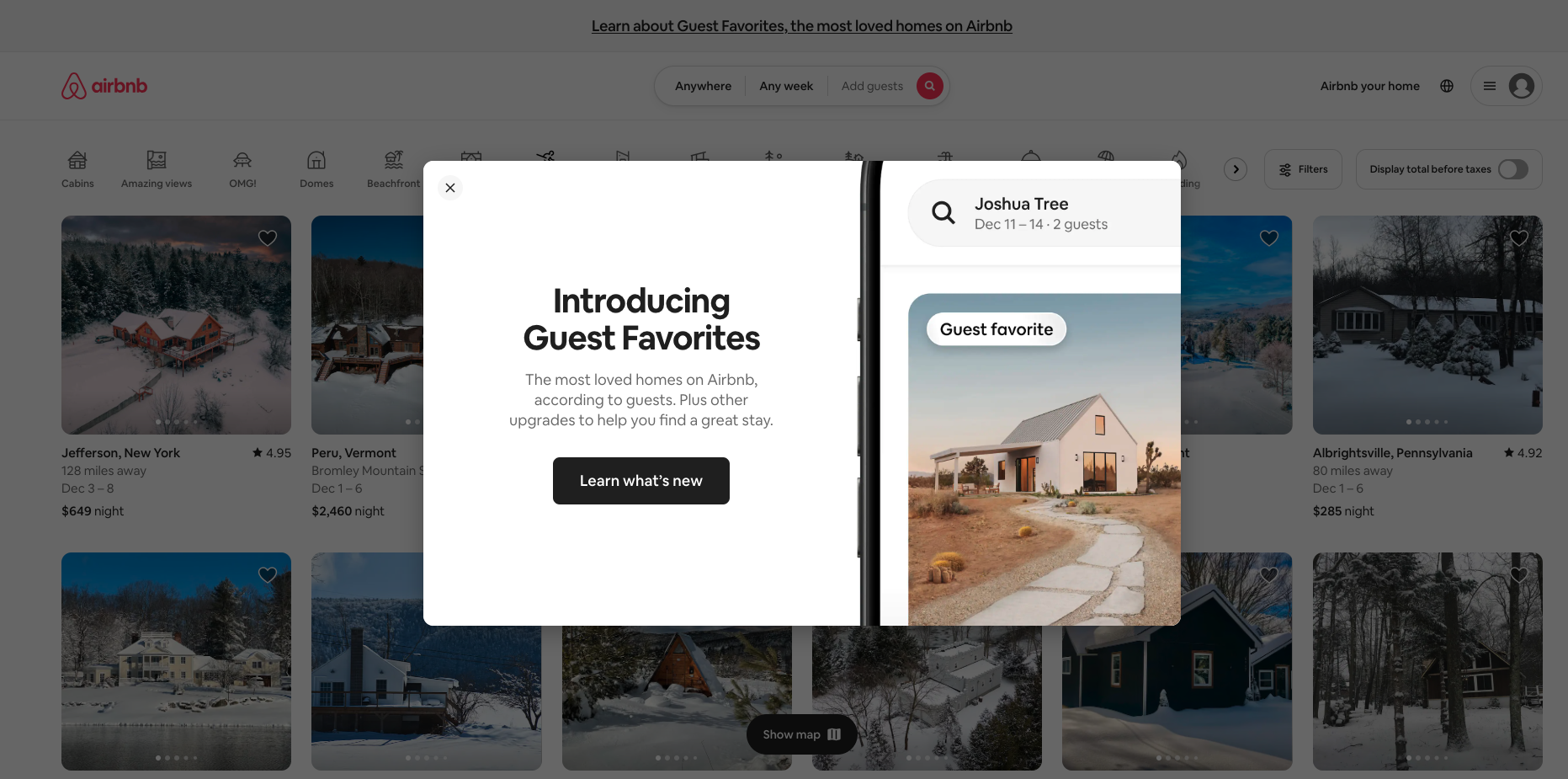

Example: Airbnb listings using Selenium webdriver

Our fourth example uses Tanuki to extract Airbnb listing information for nearby ski lodges. Scraping this information from the Airbnb page is more complex, because a modal dialog greets the user on page load (see the accompanying image) and blocks a basic HTTP request from scraping the page content. In addition, only about half of the listings are rendered on the initial page load, so the user must scroll roughly halfway down the page to trigger rendering of the remaining listings.

Accessing the Airbnb listings requires browser automation, which is precisely where Selenium comes into play. Selenium is a convenient framework for scraping information from a web page that requires JavaScript to be executed. It drives a real web browser to navigate to pages, so it executes the page's JavaScript and returns content that may not be available through simple HTTP requests.

The following code uses Selenium to access and extract the page source for the Airbnb listings. The function loads the Airbnb search page, removes the modal dialog, scrolls to load more content, and retrieves the page source, ready for further parsing or analysis. To execute this function in headless mode (i.e. without launching the browser GUI), uncomment the two commented-out options.add_argument lines.

import time

from selenium import webdriver
from selenium.webdriver.chrome.options import Options

def selenium_driver() -> str:
    """Use selenium to scrape the airbnb url and return the page source."""
    # configure webdriver
    options = Options()
    # options.add_argument('--headless')  # Enable headless mode
    # options.add_argument('--disable-gpu')  # Disable GPU acceleration
    # launch driver for the page
    driver = webdriver.Chrome(options=options)
    driver.get("https://www.airbnb.com/?tab_id=home_tab&refinement_paths%5B%5D=%2Fhomes&search_mode=flex_destinations_search&flexible_trip_lengths%5B%5D=one_week&location_search=MIN_MAP_BOUNDS&monthly_start_date=2023-12-01&monthly_length=3&price_filter_input_type=0&channel=EXPLORE&search_type=category_change&price_filter_num_nights=5&category_tag=Tag%3A5366")
    time.sleep(3)
    # refresh the page to remove the dialog modal
    driver.refresh()
    time.sleep(3)
    # Scroll roughly halfway (40% of the scrollable distance) down the page so the rest of the listings load
    scroll_position = driver.execute_script("return (document.body.scrollHeight - window.innerHeight) * 0.4;")
    driver.execute_script(f"window.scrollTo(0, {scroll_position});")
    time.sleep(3)
    # extract the page source and return
    page_source = driver.page_source
    driver.quit()
    return page_source
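The scroll target in the function above is computed in the browser as a fraction of the scrollable distance. The same arithmetic, written in Python, is sketched below. `scroll_target` is a hypothetical helper, and the page dimensions are made up for illustration.

```python
def scroll_target(scroll_height: float, inner_height: float, fraction: float = 0.4) -> float:
    """Return the vertical offset that is `fraction` of the scrollable distance.

    Mirrors the JavaScript expression
    (document.body.scrollHeight - window.innerHeight) * 0.4.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    # The scrollable distance is zero when the page fits inside the window.
    scrollable = max(scroll_height - inner_height, 0)
    return scrollable * fraction


# Illustrative numbers: a 5000 px tall page viewed in a 1000 px tall window.
print(scroll_target(5000, 1000))
```

With a fraction of 0.4 the browser stops a little short of the midpoint of the scrollable distance, which appears to be enough to trigger loading of the remaining listings in this example.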

The page_source returned from the above function can be processed with BeautifulSoup using the same strategy as before to extract all div elements that share the class name "dir dir-ltr". Note that additional processing is required to remove invalid entries.

# Selenium driver to scrape the url and extract the airbnb information
page_source = selenium_driver()
# Beautiful Soup to parse the page source
soup = BeautifulSoup(page_source, 'html.parser')
entities = soup.find_all('div', class_="dir dir-ltr")
# Remove entries that are not airbnb listings
contents = [entity.text for entity in entities if entity.text != ""]
contents = [c for c in contents if "$" in c]
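The two filtering steps above can be folded into one helper, which also makes them easy to check on sample strings. `keep_listing_texts` is a hypothetical name and the sample strings are invented. The "$" test is the same heuristic used above, so it will also keep any non-listing text that happens to contain a dollar sign.

```python
from typing import Iterable, List


def keep_listing_texts(texts: Iterable[str]) -> List[str]:
    """Keep non-empty texts that contain a price marker ("$")."""
    return [text for text in texts if text and "$" in text]


samples = ["", "Show map", "Example Town, Vermont Dec 1-6 $100 night 4.9"]
print(keep_listing_texts(samples))
```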

Finally, we can call Tanuki using the @tanuki.patch decorated function below to process the listing blurbs into the desired AirBnb class data structure.

class AirBnb(BaseModel):
    city: str
    state: str
    dates: str
    price: float
    stars: float

@tanuki.patch
def extract_airbnb(content: str) -> Optional[AirBnb]:
    """
    Examine the content string and extract the airbnb details for the city, state,
    dates available, nightly price, and stars rating.
    """

Putting it all together, the resulting output for a single listing is:

AirBnb(
    city='Peru',
    state='Vermont',
    dates='Dec 1 – 6',
    price=2460.0,
    stars=4.95
)