AI functions in a nutshell

In early 2023, the term “AI functions” started to appear, for example in this post by Databricks. Although the exact definition is still crystallizing, we want to explain the high-level concept of AI functions and the motivation behind them.

In Python, functions are fundamental building blocks of software. They can be characterized as 1) formally defined (in input, output, and behavior), 2) modular, 3) easy to implement and invoke, and 4) variable in time and memory complexity.

Large language models (LLMs), on the other hand, are 1) ill-defined (prompt wrangling is required and outputs can vary widely), 2) particularistic (high inter-model variability), 3) difficult to call from code, and 4) constant in execution latency and memory consumption. However, they are incredibly powerful and can perform difficult tasks without the application developer having to supply implementation details, e.g. classifying thousands of emails, generating poems, or extracting key insights from a Tweet.

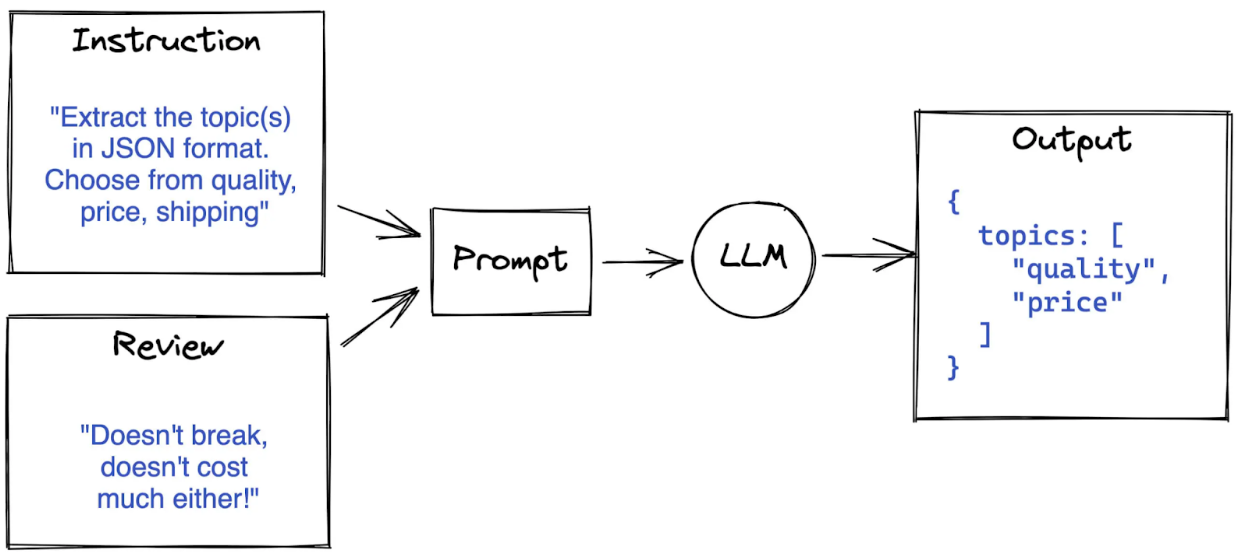

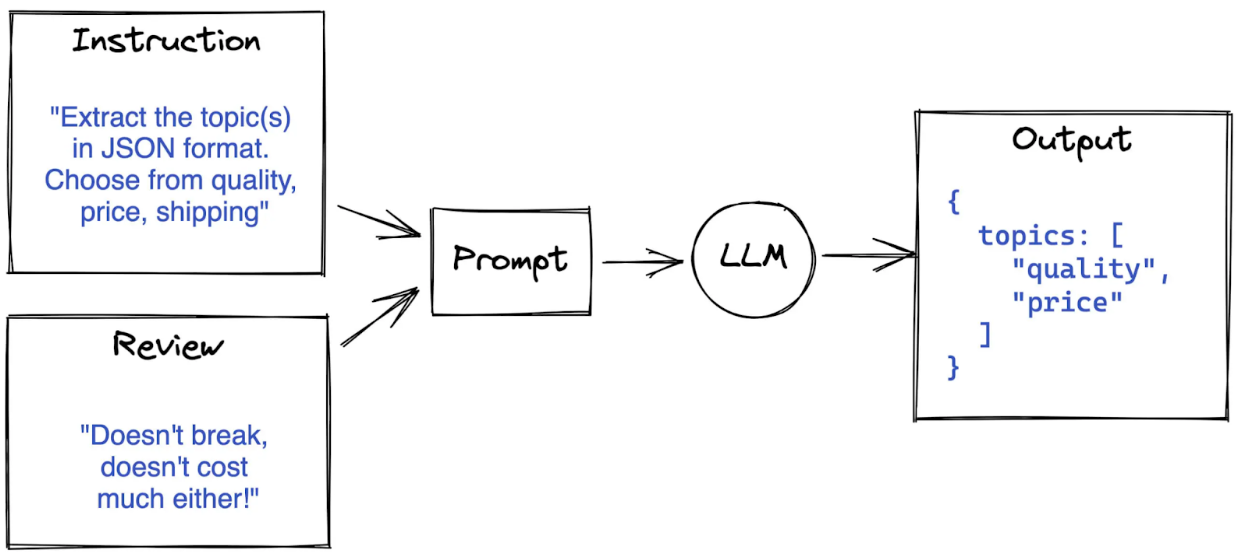

AI functions are a way of combining the structure and definition of classic functions with the power and versatility of LLMs, enabling developers to create AI-native software. In practice, an AI function regularizes the input to and output from an LLM, so that it can be called in code and behave just like a normal function, but without the developer supplying an implementation.
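To make the idea concrete, here is a minimal sketch of what such a wrapper might look like. It is only an illustration, not the Databricks design or any particular library's API: `ai_function`, `fake_llm`, and the JSON reply format are all names and conventions we made up for this example, and `fake_llm` is a stand-in that you would replace with a call to a real model.

```python
import json
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; always replies with JSON."""
    label = "spam" if "prize" in prompt.lower() else "not spam"
    return json.dumps({"result": label})


def ai_function(description: str, llm: Callable[[str], str]) -> Callable[..., str]:
    """Build a callable from a task description instead of an implementation."""

    def call(**inputs: str) -> str:
        # Regularize the input: every call produces the same prompt layout.
        prompt = (
            f"Task: {description}\n"
            f"Inputs: {json.dumps(inputs)}\n"
            'Respond with JSON of the form {"result": "<string>"}.'
        )
        raw = llm(prompt)

        # Regularize the output: parse and validate before returning.
        result = json.loads(raw)["result"]
        if not isinstance(result, str):
            raise TypeError("model reply did not contain a string result")
        return result

    return call


# The developer supplies a description, not a function body.
classify_email = ai_function("Classify the email as 'spam' or 'not spam'.", fake_llm)
label = classify_email(email="You have won a prize! Click here.")
print(label)
```

From the caller's point of view, `classify_email` looks like any other Python function: named inputs go in and a validated string comes out, while the prompt construction and reply parsing stay hidden inside the wrapper.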